Automating Discrimination: The Unseen Risk in Government Data

Algorithmic Bias: The Ultimate Procurement & Risk Management Failure

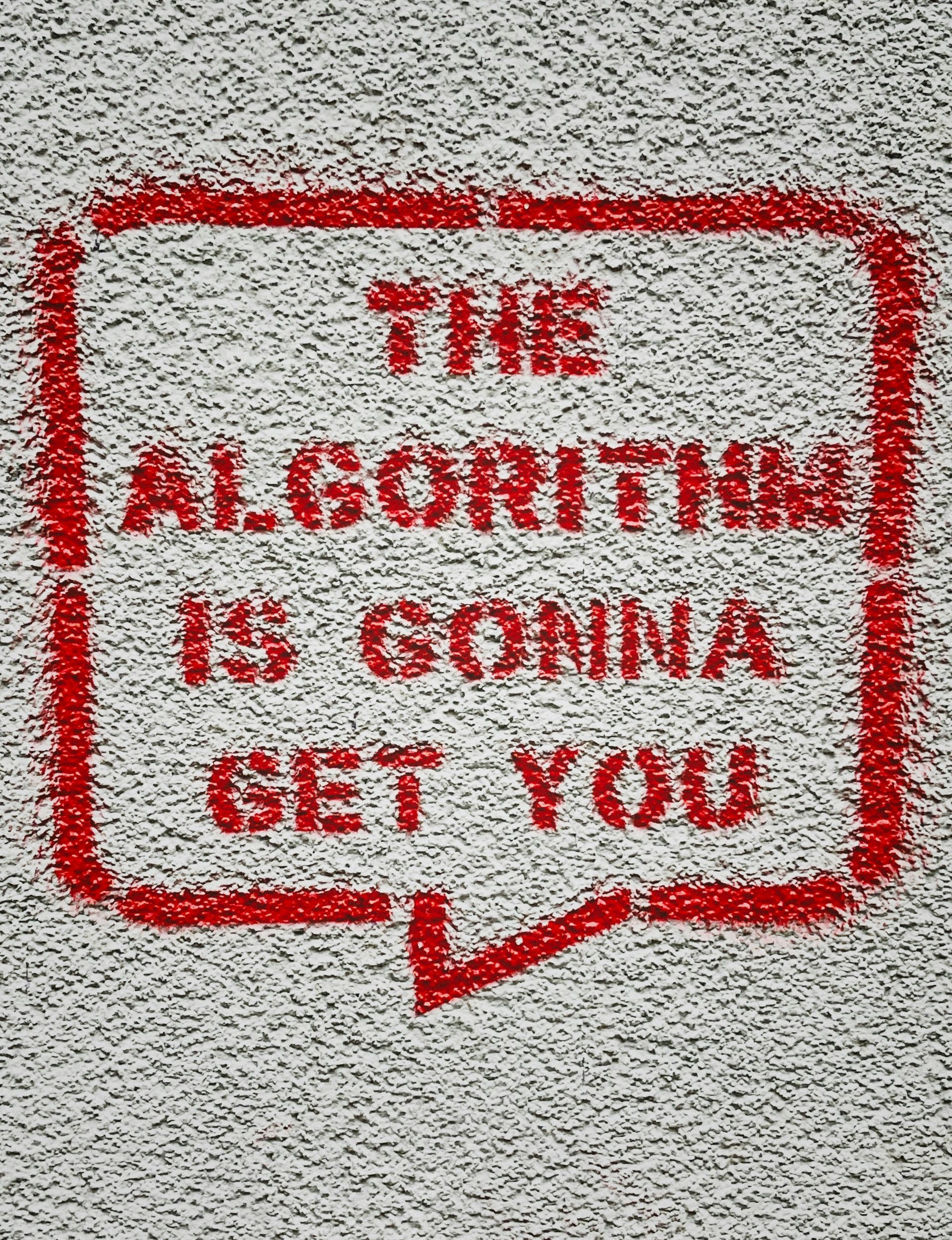

The promise of Artificial Intelligence in government is compelling, but AI merely automates and amplifies existing human processes. Failing to audit for bias in both the underlying training data (which directly shapes the AI's logic) and the resulting decision-making model risks automating any human-level discrimination, potentially devastatingly, at industrial scale.

Algorithmic bias is a clear and present danger that needs to be understood by public sector decision-makers, contract managers, and technology policy teams. For these parties, the danger is amplified because the ultimate legal liability for discriminatory, non-transparent decisions falls directly onto the public agency, not on the technology itself.

As procurement agencies adopt AI tools for high-stakes decisions (from resource allocation to predictive policing), the risk of algorithmic bias represents an unseen, massive legal and ethical liability.

But before looking at how it occurs, it's essential to be crystal clear on what it is: Algorithmic bias is the systematic and unfair discrimination inherent in a computer system's results.

It manifests when an AI system produces results that consistently favour or penalise one group over another, even if no one ever explicitly programmed the bias.

How Algorithmic Bias Occurs

Algorithmic bias occurs when the historical prejudices present in the training data (data often reflecting historic societal inequities) are amplified by the AI system, leading to unequal or unfair outcomes for specific demographic groups.

By way of example, a system designed to allocate resources for social services might learn from data that certain neighbourhoods have historically received a lesser level of government investment, leading the AI to systematically under-allocate resources to those same areas today.

As another example, if the historical data behind a hiring tool favours male applicants, the AI can learn this bias and continue to penalise female applicants, even when all other qualifications are equal.
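
The hiring example can be made measurable. The following is a minimal Python sketch, not a complete fairness audit: it computes the selection rate for each group and the ratio of the lowest rate to the highest. The sample figures, the function names, and the 0.8 review threshold are illustrative assumptions (the threshold is borrowed from the "four-fifths" rule of thumb used in US employment guidance; an agency would need to set its own standard).

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive outcomes for each group.

    `records` is an iterable of (group, selected) pairs, where `selected` is a bool.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so no disparity to measure
    return min(rates.values()) / highest


# Hypothetical historical hiring outcomes: (group, was_hired)
history = (
    [("male", True)] * 60 + [("male", False)] * 40
    + [("female", True)] * 30 + [("female", False)] * 70
)

REVIEW_THRESHOLD = 0.8  # assumption: illustrative cut-off, not a legal standard

rates = selection_rates(history)
ratio = disparity_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD
print(rates, round(ratio, 2), needs_review)
```

A check like this only surfaces a disparity in outcomes. It does not explain the cause, which is why it belongs alongside, not instead of, a review of the training data itself.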

Profound Risk Management Failure

We're not talking merely about isolated coding errors - which are addressable technical issues.

We're talking about profound, systemic risk management failure compounded by a lack of transparency - a serious management liability.

This is how it works (or perhaps more aptly put, doesn't work):

A lack of transparency means the process is a "black box".

If an agency uses a biased AI system to deny a housing application, and the agency cannot explain why the system reached that decision, the agency has failed its legal and moral duty.

On the one hand, this can turn the AI into a legal liability shield - a way for the agency to claim, "The computer did it", and skirt accountability for a discriminatory outcome. On the other hand, a responsible agency and any forward-thinking senior manager will see that the discrimination is now baked in as a systemic issue for the organisation, and that it is magnified with every automated decision.

Accountability Should Be Integrated into the Procurement Process

The risk management failure stems from neglecting to mandate accountability and auditability, which - critically - should be integrated directly into the procurement process.

This means that the required technical controls - the ability to trace a decision back to its source and verify its fairness - must be written into the Request for Proposal (RFP) and the final contract.

By signing a contract without these provisions, the agency ties its own hands, making it difficult or impossible to enforce ethical requirements after deployment.

The Implications for Procurement Policies & Processes

The moral of the story for procurement leaders and vendors is that the focus must shift from simply evaluating speed to rigorously auditing the AI’s social and ethical impact. That starts with explicit, mandatory requirements for auditability and accountability written directly into the RFP.

This must be a prerequisite for deployment, assessed before technical speed or cost.

The ramifications of integrating Artificial Intelligence trained on biased data, whose disparities have gone undetected, into agency decision-making processes are immediate and extreme: they range from loss of public trust and political backlash all the way through to legal challenge under anti-discrimination laws.

Radical, Auditable Transparency: Nothing Less Will Do

Clearly, the strategy must prioritise prevention over cure.

At agency level, there must be auditable transparency of all data sources.

Vendors, for their part, need to "open the black box" and give the agency client access to the model's decision-making architecture or "reasoning", i.e. the ability to track why the model made a decision (explainability) and to verify the data that was used to teach the system.
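
What "tracking why the model made a decision" can look like is easiest to see in the simplest possible case. The sketch below assumes a transparent weighted-sum score for a housing application; the factor names, weights, and threshold are all hypothetical, and real procured systems are usually far more complex and need dedicated explainability tooling. The point is only that each decision comes with a ranked list of the factors that drove it.

```python
# Hypothetical weights for a housing-application score (assumed for illustration).
WEIGHTS = {"income_ratio": 2.0, "years_at_address": 0.5, "prior_arrears": -3.0}
APPROVAL_THRESHOLD = 4.0  # assumption: scores at or above this are approved


def explain_decision(applicant):
    """Score one applicant and report how much each factor contributed."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = sum(contributions.values())
    outcome = "approve" if score >= APPROVAL_THRESHOLD else "deny"
    # Rank factors by the size of their effect, largest first.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return {"outcome": outcome, "score": score, "ranked_factors": ranked}


applicant = {"income_ratio": 1.5, "years_at_address": 4, "prior_arrears": 2}
report = explain_decision(applicant)
print(report["outcome"], report["ranked_factors"])
```

With a report like this, an auditor can see that the denial was driven mainly by `prior_arrears` and can then ask whether that factor is standing in for a protected characteristic.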

Without an explicit review of the training data and the detection of any and all potential for unfair weighting of different factors in the decision-making process, any government AI initiative runs the risk of violating anti-discrimination laws and eroding public trust.

Securing full contractual accountability requires mandatory, verifiable standards that are non-negotiable in every contract and policy implementation.

Who Bears the Burden of Obligation? The Vendor’s Mandate

It's a common error for public sector agencies to assume that the responsibility for ethical performance resides solely within their own compliance team.

In the case of AI accountability, this assumption is flawed. While the agency bears the ultimate public liability for the system's discriminatory outcomes, the burden of obligation - the capacity to demonstrate ethical compliance - must be contractually held by the vendor.

The vendor is typically the only party that owns the intellectual property (IP) for the AI model, which includes access to the proprietary training data, algorithmic weights, and the decision logic - the core elements of the "black box".

The government buys the right to use the software, but the vendor retains control over its internal mechanics.

Therefore, for the procurement contract to be legally sound, the agency must make the vendor contractually liable for proving the system's ethical integrity. Without this specific clause, the government assumes the entire legal risk for a system it cannot inspect.

Mandatory Contractual Mechanisms

To manage this risk, the RFP and the final contract must contain clear and specific provisions requiring the vendor to provide the necessary tools and documentation for compliance.

The rationale for placing this burden on the vendor is simple: the vendor possesses the technical expertise to manage the risk.

While the agency owns the historically flawed data, the vendor is the expert in machine learning and is best placed to detect, measure, and build mitigation strategies for biases.

The government's contract must stipulate that the vendor is responsible for these key actions:

- Audit Tools and Explainability: The vendor must deliver specific mechanisms that allow the agency's auditors to track the AI's decision path (explainability). This is the technical capacity to trace a decision back to its source data.

- Bias Reporting and Data Provenance: The vendor must warrant that the training data has been subjected to a rigorous bias assessment and must provide the results. Furthermore, they must fully document and trace the source of all training data to ensure it meets public sector standards for completeness and impartiality.
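
The two provisions above could translate into a per-decision audit record that the vendor's system is contractually required to produce. The following Python sketch is one possible shape for such a record, under stated assumptions: every field name and identifier is hypothetical, and the SHA-256 fingerprint only helps detect later changes if the agency stores it separately from the record itself.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class DecisionRecord:
    """One auditable entry per automated decision (all field names are hypothetical)."""
    decision_id: str
    model_version: str
    training_data_sources: tuple  # provenance identifiers documented by the vendor
    bias_assessment_ref: str      # pointer to the vendor's bias assessment results
    inputs: dict                  # the data the model saw for this decision
    outcome: str

    def fingerprint(self) -> str:
        """SHA-256 over a canonical JSON form of the record."""
        canonical = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


record = DecisionRecord(
    decision_id="example-000123",
    model_version="vendor-model-1.4",
    training_data_sources=("housing_register_extract",),
    bias_assessment_ref="bias-report-007",
    inputs={"household_size": 3, "income_band": "B"},
    outcome="deny",
)
stored_fingerprint = record.fingerprint()

# Any later change to the record produces a different fingerprint.
altered = replace(record, outcome="approve")
print(stored_fingerprint == record.fingerprint(), stored_fingerprint == altered.fingerprint())
```

Keeping the model version, data sources, and bias assessment reference on every record is what lets an auditor trace a single contested decision back to the data and the assessment behind it.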

By mandating these provisions, the government shifts the vendor's role from simply selling technology to an agency to guaranteeing ethical accountability. If the vendor cannot provide these contractual guarantees, the agency must not sign the contract.

This final check is the only way to ensure the public's trust remains intact and that Artificial Intelligence serves as a tool for genuine progress, not automated injustice.